Server Setup: Nvidia Tesla P100 Hosting Environment

Before diving into the Ollama P100 benchmark, let's review the test setup:

Server Configuration:

- Price: $159–199/month

- CPU: Dual 10-Core Xeon E5-2660v2

- RAM: 128GB DDR3

- Storage: 120GB NVMe + 960GB SSD

- Network: 100Mbps Unmetered

- OS: Windows 11 Pro

GPU Details:

- GPU: Nvidia Tesla P100

- Compute Capability: 6.0

- Microarchitecture: Pascal

- CUDA Cores: 3584

- Memory: 16GB HBM2

- FP32 Performance: 9.5 TFLOPS

This configuration provides ample RAM and storage for smooth model loading and execution, while the P100's 16GB of VRAM lets it run larger models than the RTX 2060 we tested in our previous Ollama benchmark.
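Before running any models, it can help to confirm that the driver actually sees the GPU and its full memory. The snippet below is a minimal sketch that assumes `nvidia-smi` is on the PATH; the helper names `parse_gpu_csv` and `query_gpus` are our own, not part of any NVIDIA or Ollama tooling.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi; all three are standard --query-gpu properties.
QUERY_FIELDS = ["name", "memory.total", "utilization.gpu"]


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    gpus = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:
            continue  # skip blank lines
        name, mem_mib, util = (field.strip() for field in record)
        gpus.append(
            {"name": name, "memory_mib": int(mem_mib), "gpu_util_pct": int(util)}
        )
    return gpus


def query_gpus():
    """Run nvidia-smi and return one dict per GPU (assumes nvidia-smi is installed)."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(QUERY_FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)
```

Calling `query_gpus()` on the server should return a single entry for the P100 with roughly 16GB of memory reported in MiB. The same utilization field can be polled while a model is generating to reproduce the GPU utilization row of a benchmark like this one.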

Benchmark Results: Running LLMs on Tesla P100 with Ollama

We tested various 7B to 16B models on the Tesla P100 Ollama setup. Here's how they performed:

| Models | deepseek-r1 | deepseek-r1 | deepseek-r1 | deepseek-coder-v2 | llama2 | llama2 | llama3.1 | gemma2 | qwen2.5 | qwen2.5 |

|---|---|---|---|---|---|---|---|---|---|---|

| Parameters | 7b | 8b | 14b | 16b | 7b | 13b | 8b | 9b | 7b | 14b |

| Size(GB) | 4.7 | 4.9 | 9 | 8.9 | 3.8 | 7.4 | 4.9 | 5.4 | 4.7 | 9.0 |

| Quantization | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 |

| Running on | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 | Ollama0.5.11 |

| Downloading Speed(mb/s) | 12 | 12 | 12 | 12 | 12 | 12 | 12 | 12 | 12 | 12 |

| CPU Rate | 3% | 3% | 3% | 3% | 4% | 3% | 3% | 3% | 3% | 3% |

| RAM Rate | 5% | 5% | 5% | 4% | 4% | 4% | 5% | 5% | 5% | 5% |

| GPU UTL | 85% | 89% | 90% | 65% | 91% | 95% | 88% | 81% | 87% | 91% |

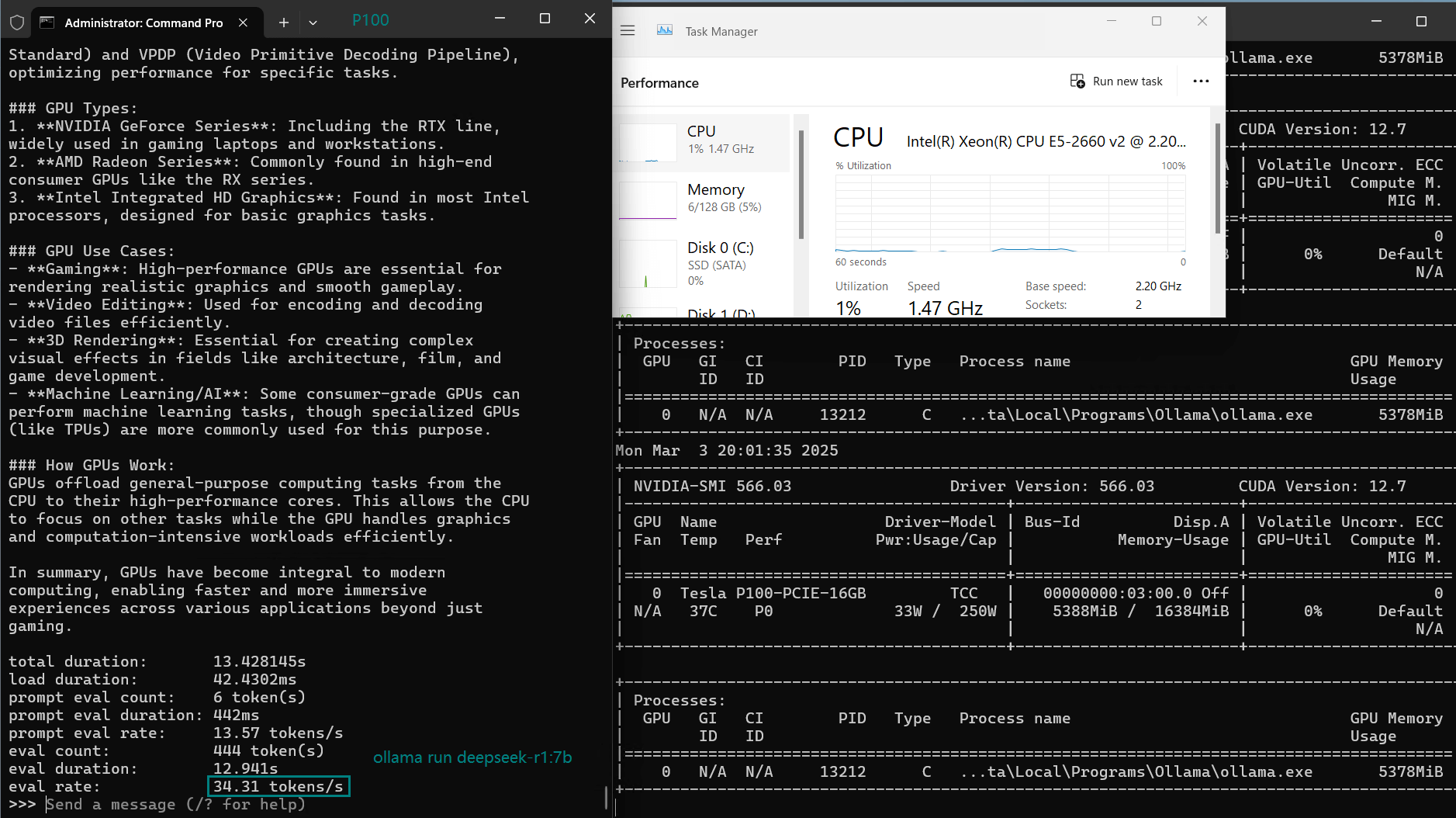

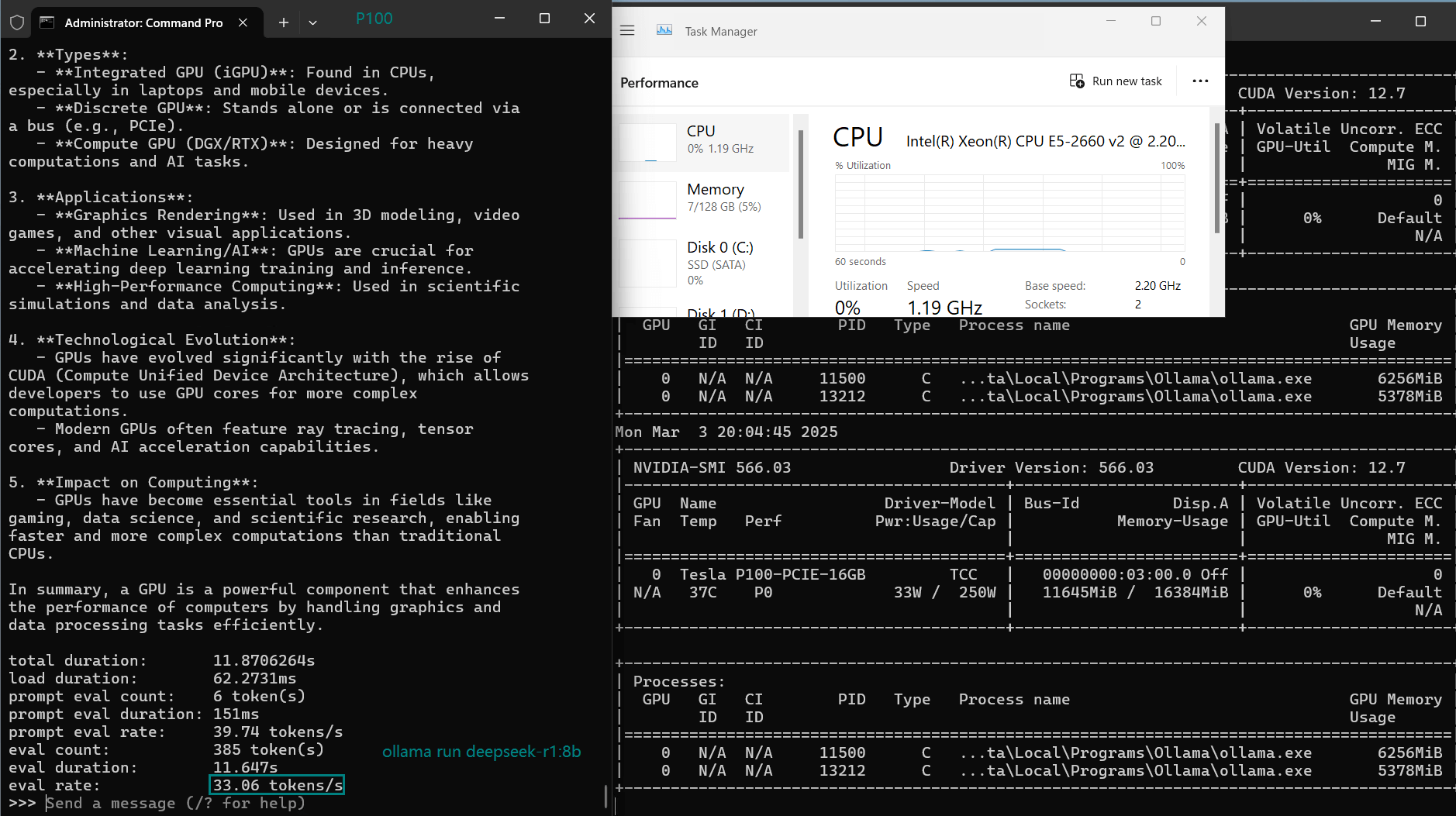

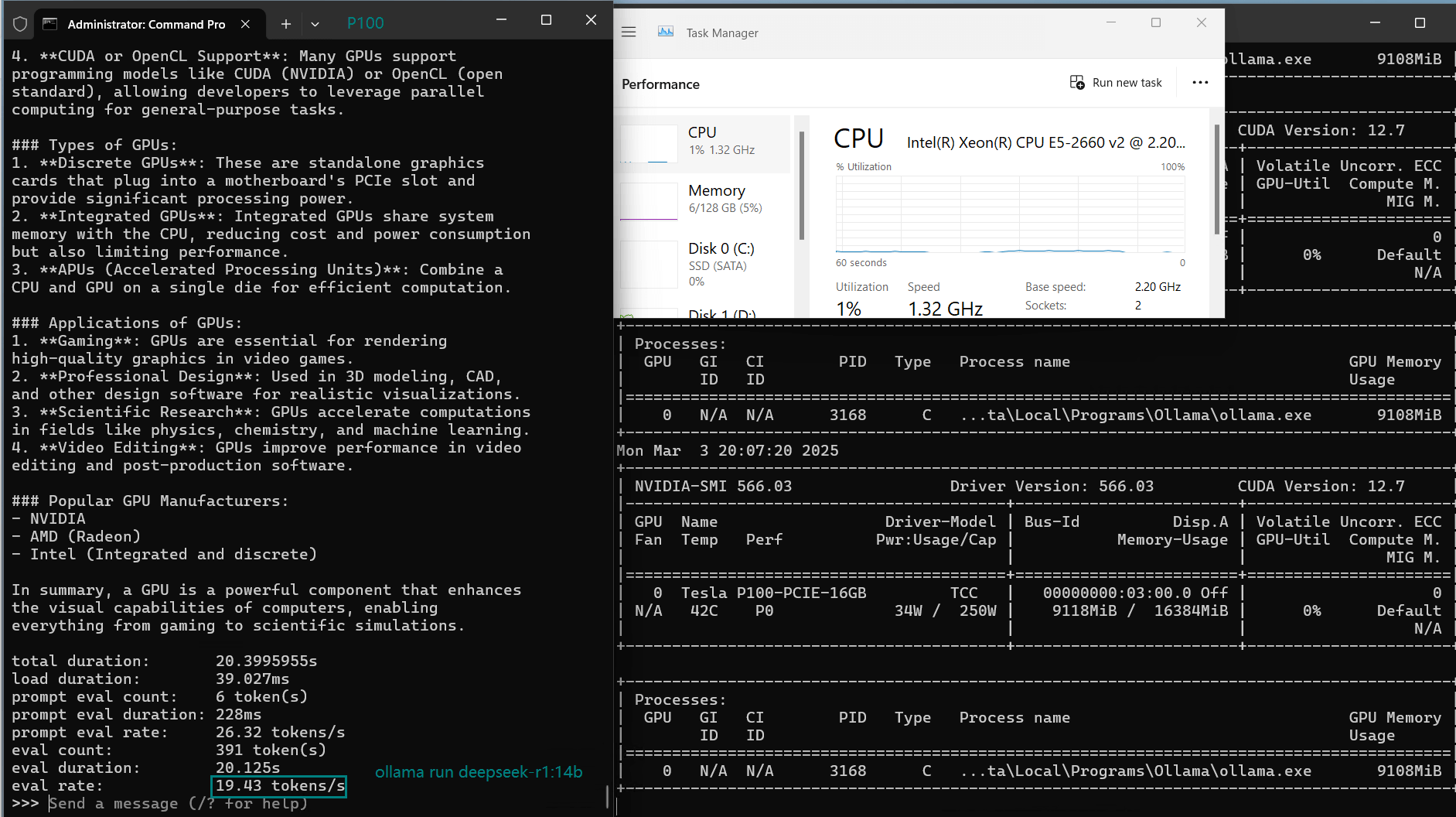

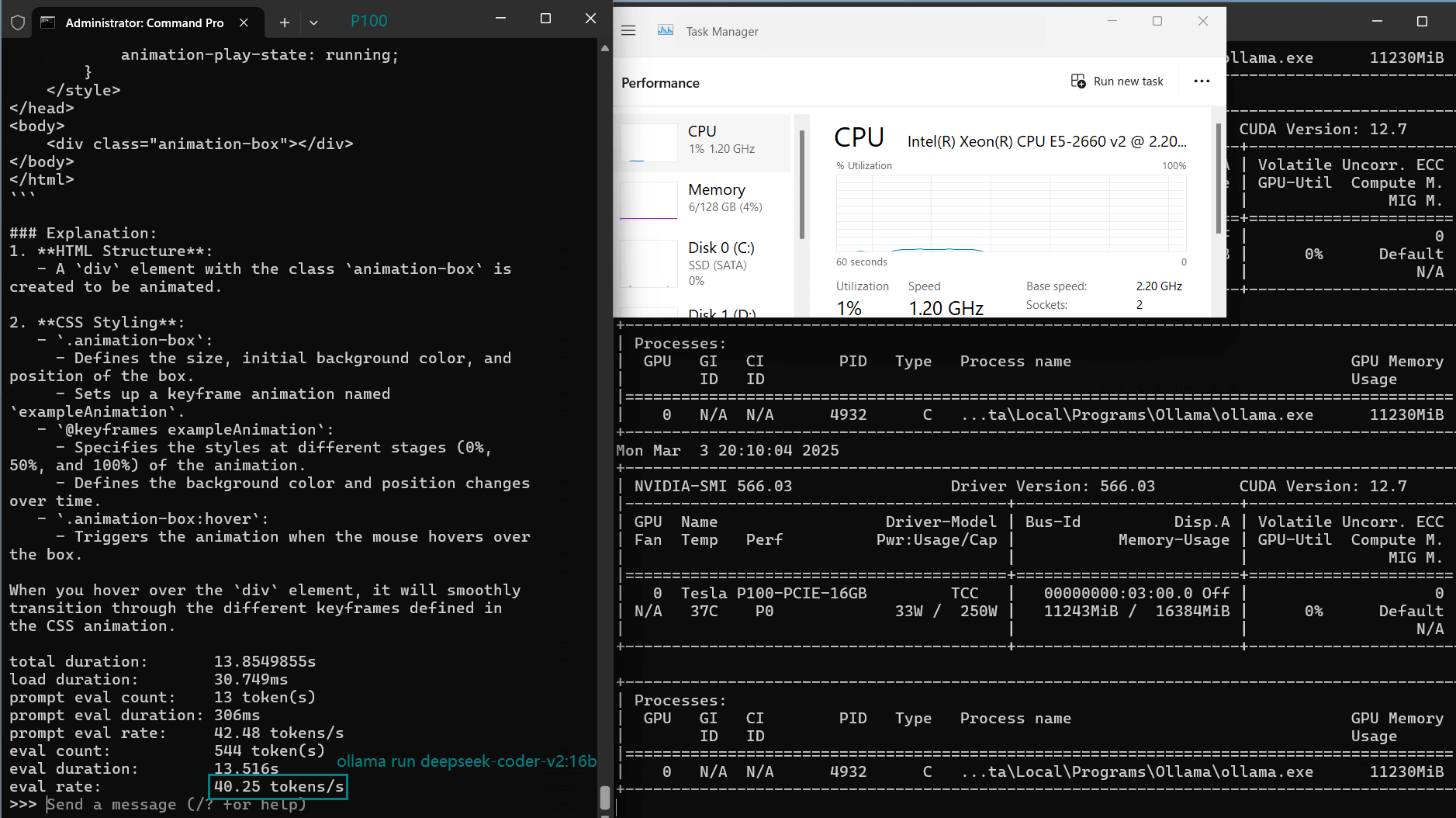

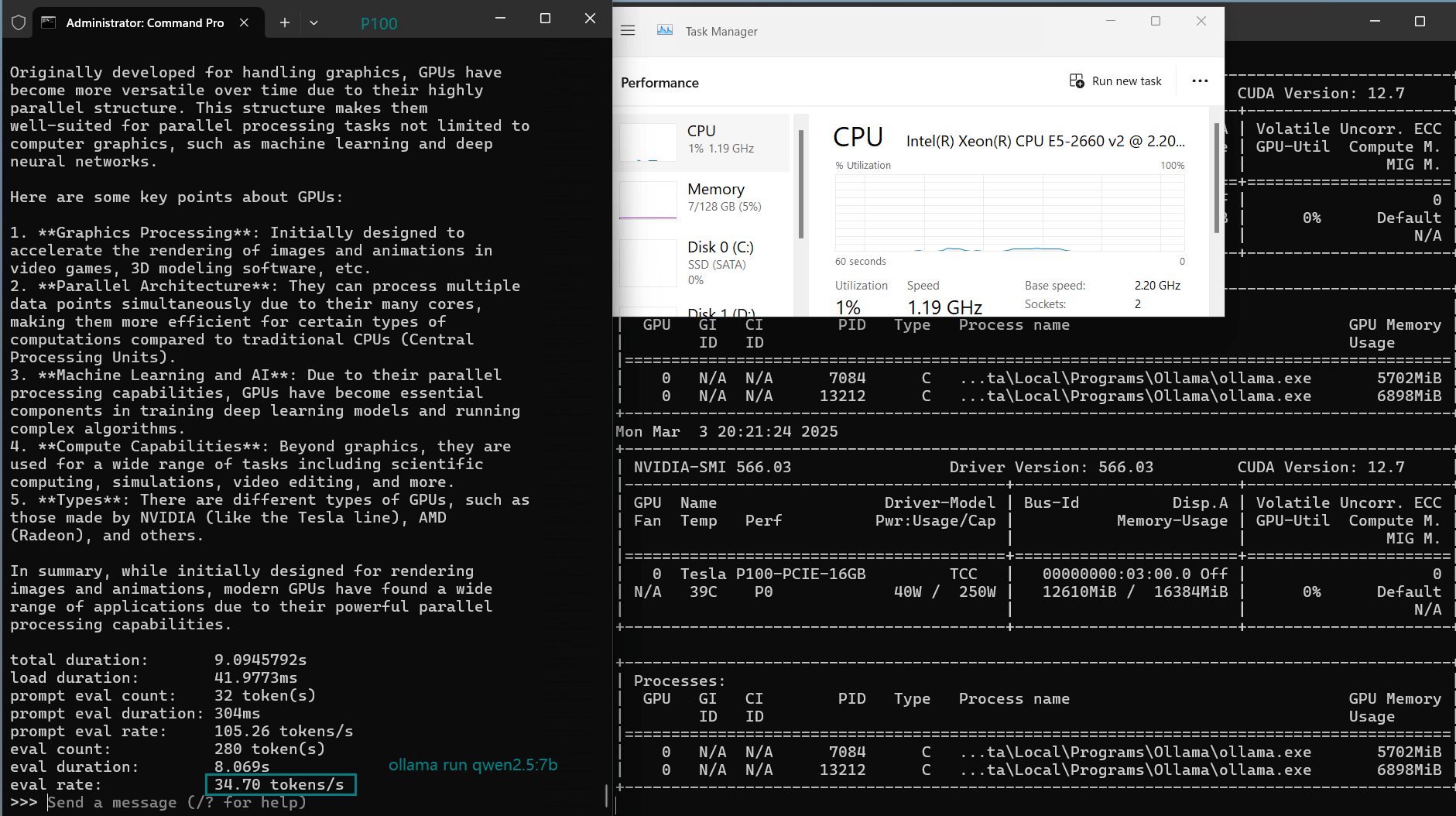

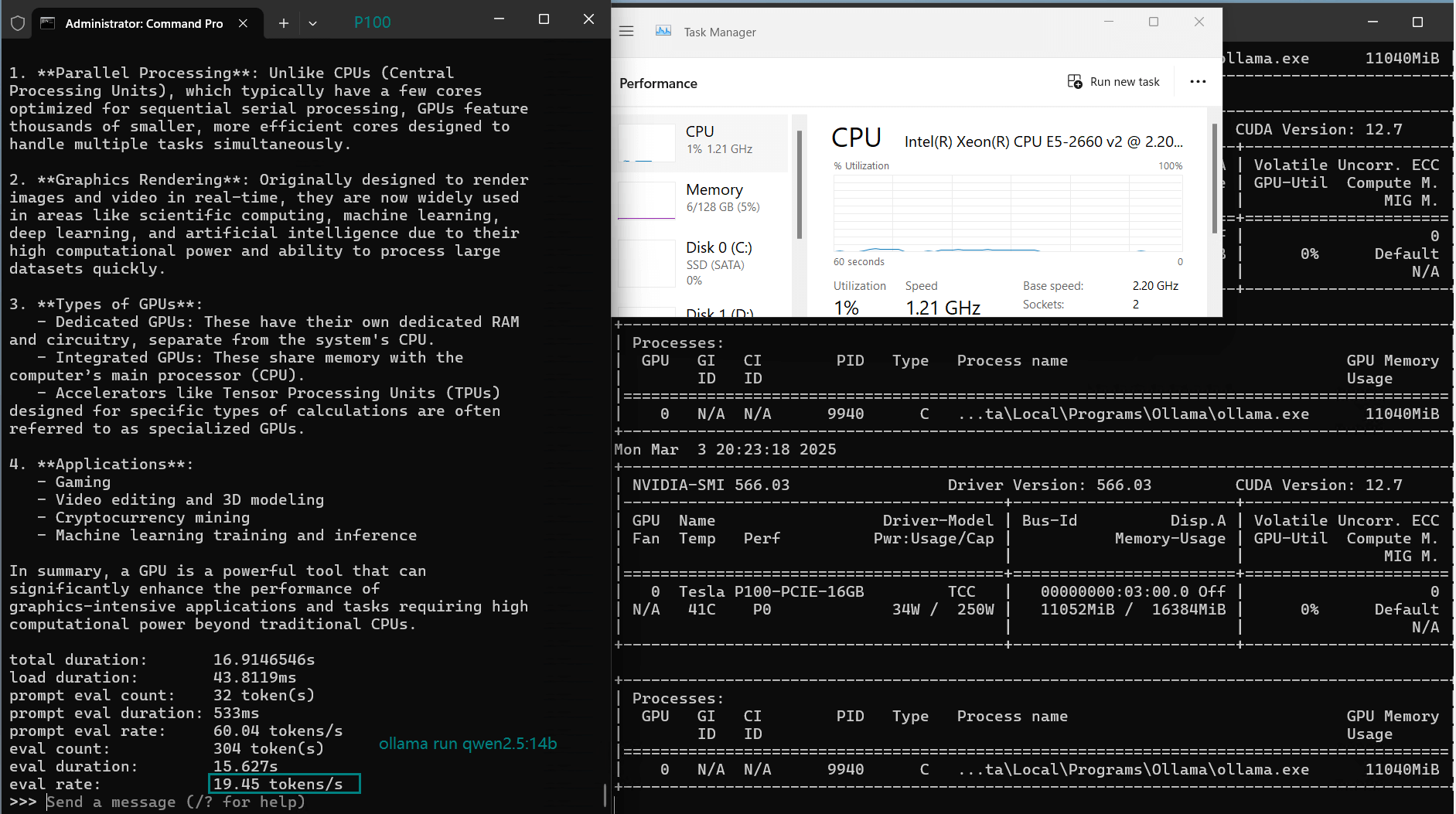

| Eval Rate(tokens/s) | 34.31 | 33.06 | 19.43 | 40.25 | 49.66 | 28.86 | 32.99 | 29.54 | 34.70 | 19.45 |

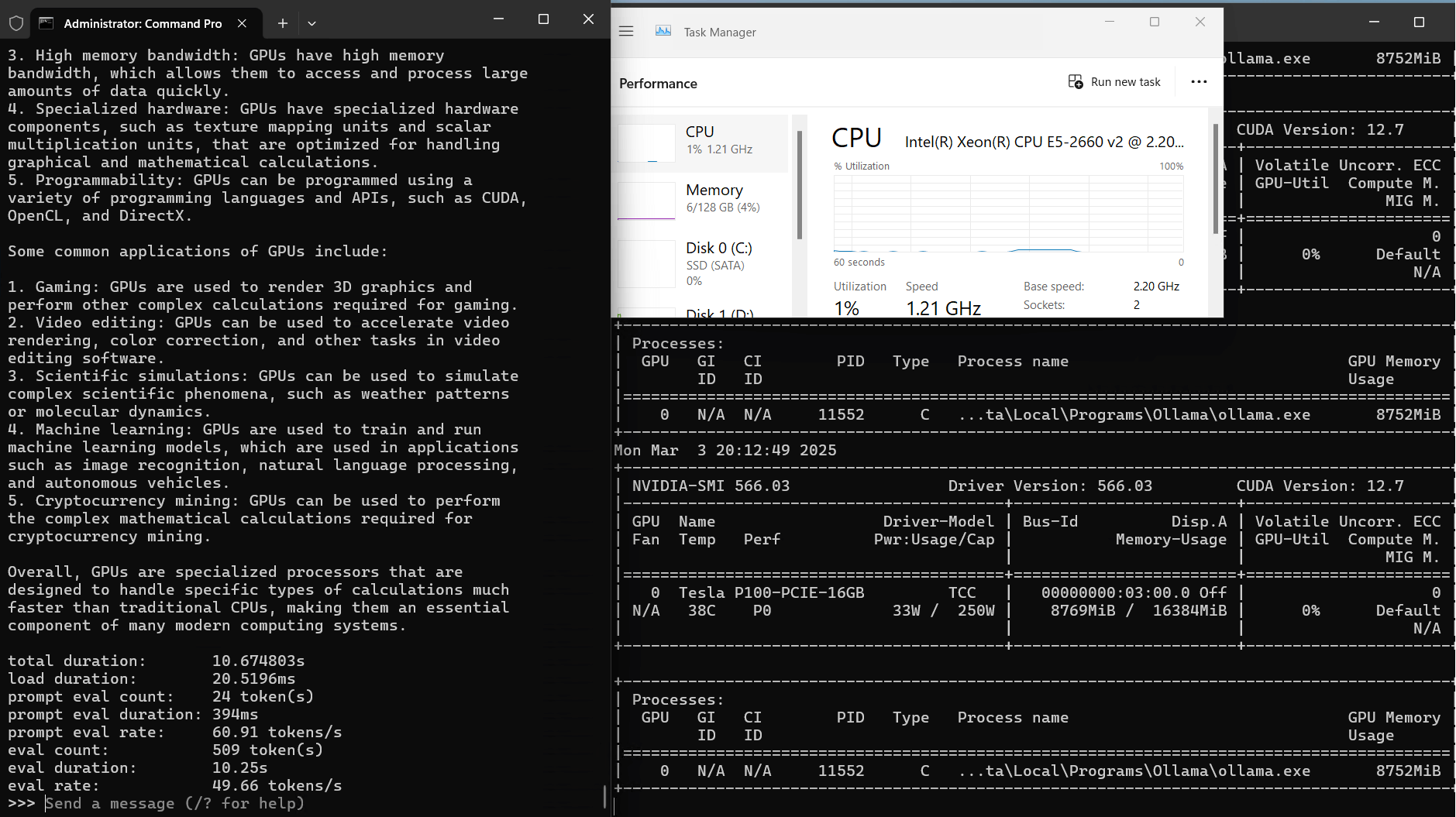

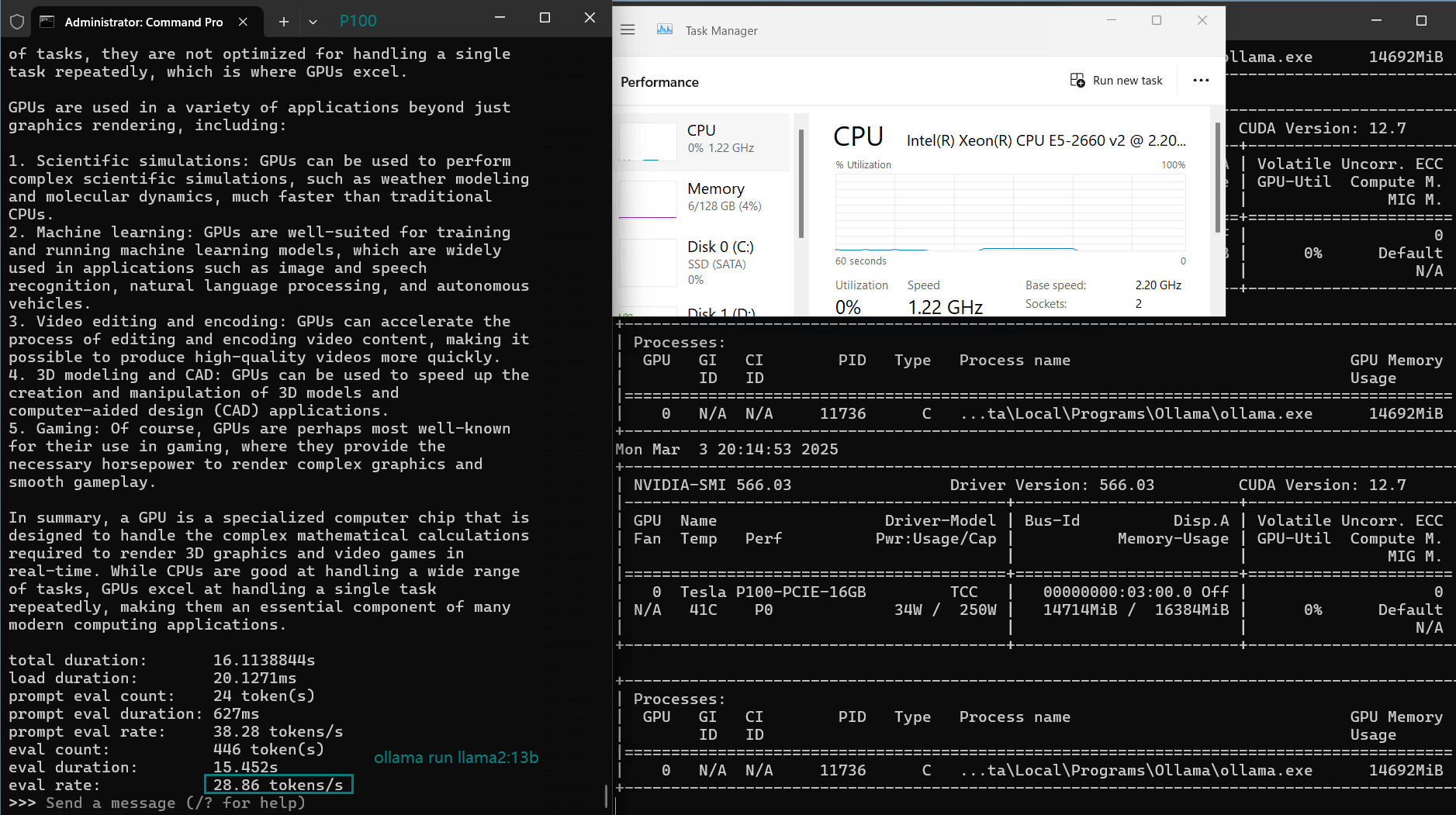

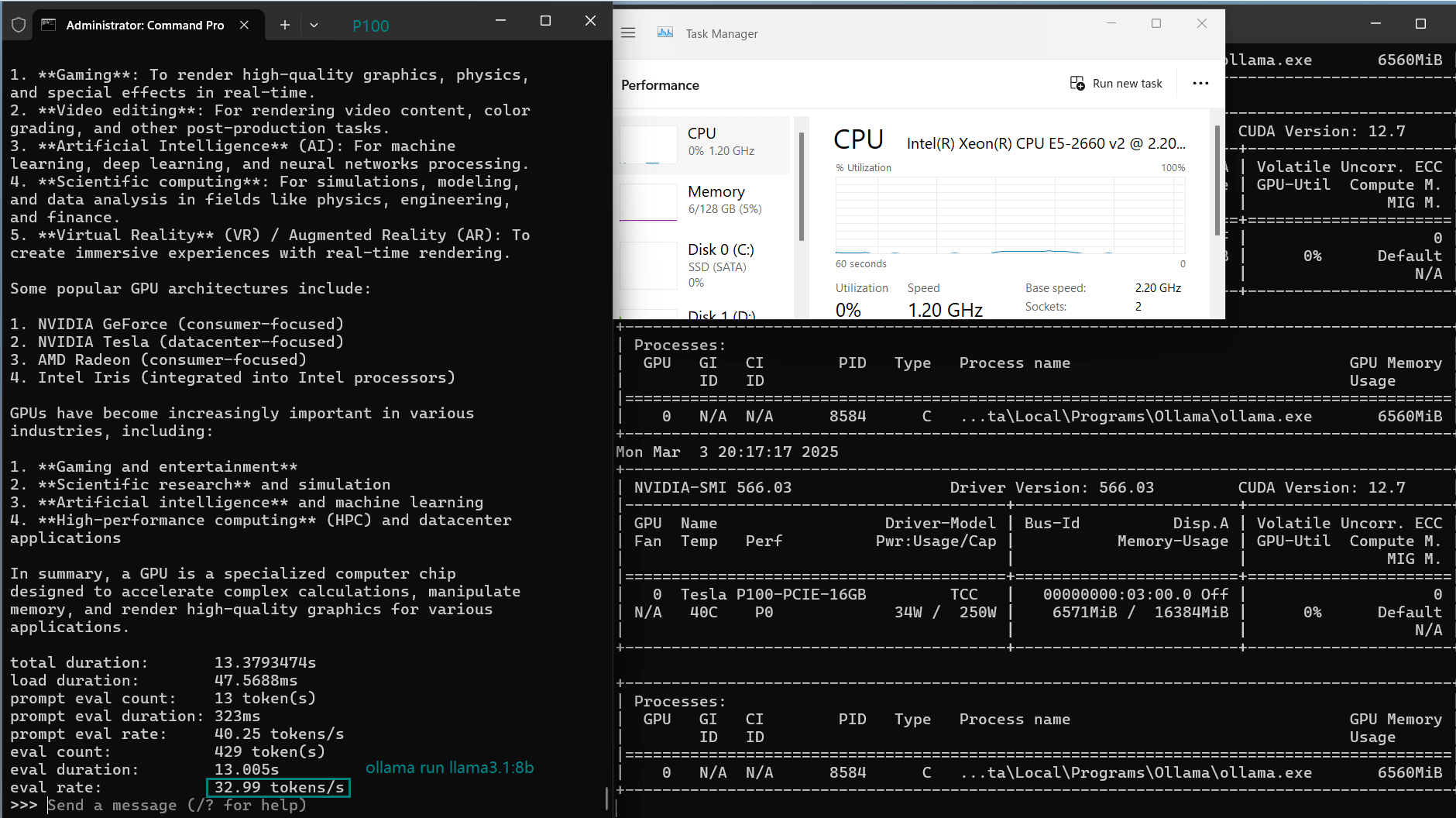

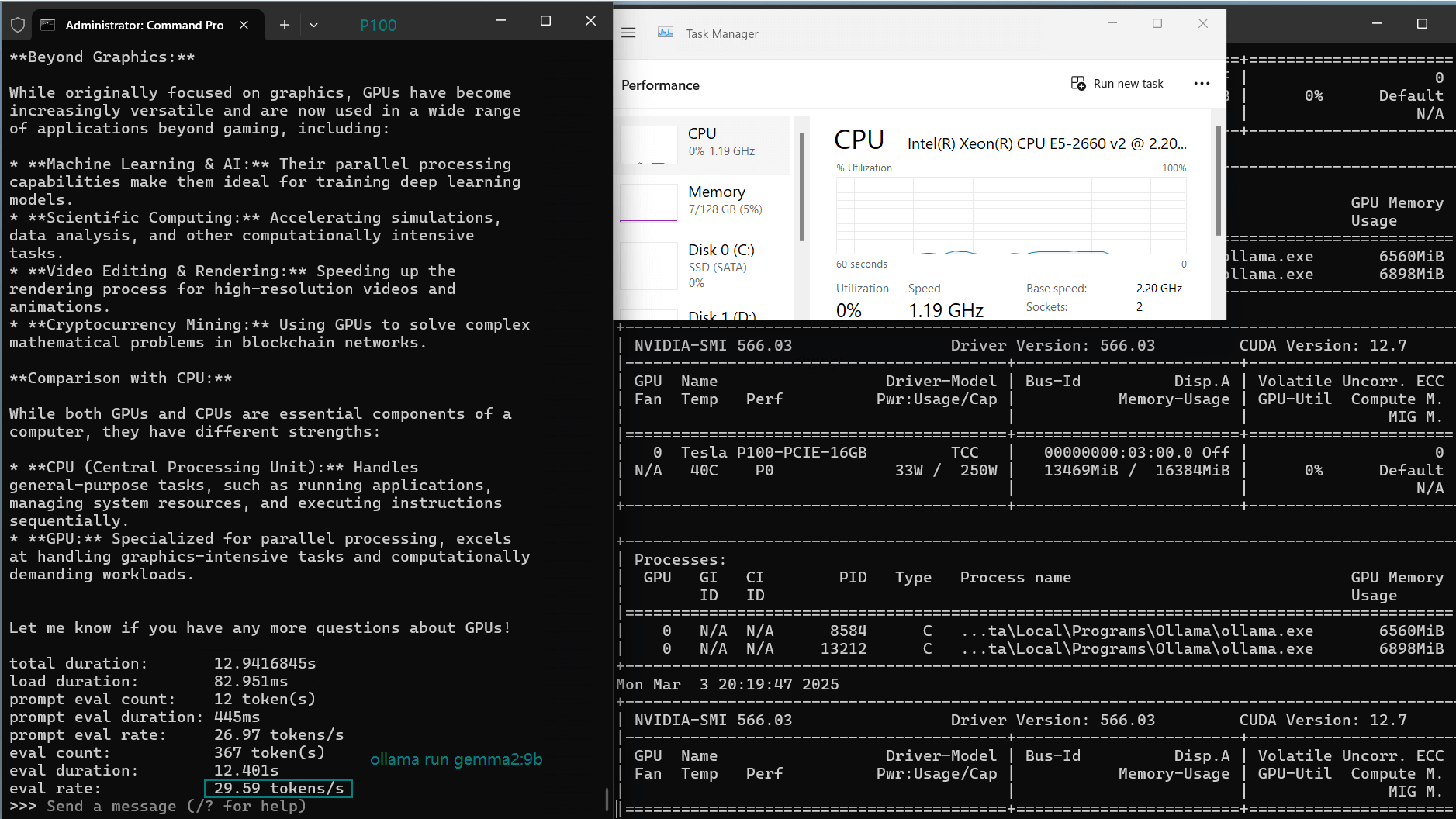
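If you want to reproduce the eval rate on your own server, one option is to compute it from the timing fields that Ollama's `/api/generate` endpoint returns. The sketch below assumes a local Ollama server on the default port 11434. The helper names `eval_rate` and `generate` are ours, and this is not necessarily how the figures in this benchmark were collected.

```python
import json
import urllib.request


def eval_rate(response):
    """Generated tokens per second, from an Ollama generate response dict.

    Ollama reports `eval_count` (tokens generated) and `eval_duration`
    (time spent generating, in nanoseconds).
    """
    seconds = response["eval_duration"] / 1e9
    return response["eval_count"] / seconds


def generate(model, prompt, host="http://localhost:11434"):
    """Send one non-streaming generate request and return the parsed JSON."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        host + "/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)
```

For example, `eval_rate(generate("llama2:7b", "Why is the sky blue?"))` returns the tokens/s for a single prompt. Averaging over several prompts gives a steadier number than a single run.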

A video recorded real-time P100 GPU resource consumption during the tests.

Screenshots from Benchmarking LLMs on Ollama with the Nvidia P100 GPU Server

Key Takeaways from the Benchmark

- 7B models run best on the Tesla P100 Ollama setup, with Llama2-7B achieving 49.66 tokens/s.

- 14B models push the limits: performance drops, with DeepSeek-r1-14B running at 19.43 tokens/s, which is still acceptable.

- Qwen2.5 and DeepSeek models offer balanced performance, staying between 33 and 35 tokens/s at 7B.

- Llama2-13B achieves 28.86 tokens/s, making it usable but slower than its 7B counterpart.

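- DeepSeek-Coder-v2-16B surprisingly outperformed the 14B models (40.25 tokens/s vs. about 19.4), likely because it is a mixture-of-experts model that activates only a fraction of its parameters per token. Its lower GPU utilization (65%) suggests the GPU was not fully used.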
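One rough way to read these takeaways is to multiply each model's size by its eval rate. This assumes that generating a token means reading roughly the whole model from VRAM once, which is a common rule of thumb rather than something this benchmark measured. The product is then an implied memory throughput in GB/s. The sketch below uses the sizes and eval rates from the table; the function name is our own.

```python
# (model, size in GB, eval rate in tokens/s), copied from the benchmark table.
RESULTS = [
    ("deepseek-r1:7b", 4.7, 34.31),
    ("deepseek-r1:8b", 4.9, 33.06),
    ("deepseek-r1:14b", 9.0, 19.43),
    ("deepseek-coder-v2:16b", 8.9, 40.25),
    ("llama2:7b", 3.8, 49.66),
    ("llama2:13b", 7.4, 28.86),
    ("llama3.1:8b", 4.9, 32.99),
    ("gemma2:9b", 5.4, 29.54),
    ("qwen2.5:7b", 4.7, 34.70),
    ("qwen2.5:14b", 9.0, 19.45),
]


def implied_bandwidth_gbps(size_gb, tokens_per_s):
    """GB read per second if every token reads the full model once (heuristic)."""
    return size_gb * tokens_per_s


# Print models from lowest to highest implied throughput.
for name, size_gb, rate in sorted(
    RESULTS, key=lambda row: implied_bandwidth_gbps(row[1], row[2])
):
    print(f"{name:24s} {implied_bandwidth_gbps(size_gb, rate):6.1f} GB/s")
```

Under this assumption, most models land between roughly 160 and 215 GB/s, while deepseek-coder-v2 stands out at about 358 GB/s. That gap is consistent with a model that reads only part of its weights per token, so its 16B parameter count overstates the work done per token.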

Is Tesla P100 Good for LLMs on Ollama?

If you're looking for affordable Nvidia P100 hosting for LLM inference, the Tesla P100 is a solid choice for models up to 13B. Compared with the RTX 2060 in our earlier Ollama benchmark, its 16GB of VRAM lets it handle larger models, although the 14B models already drop to about 19 tokens/s and models beyond 16B may struggle.

✅ Pros of Using NVIDIA P100 for Ollama

- Handles 7B-13B models efficiently

- Lower cost than A4000 or V100

- Better than RTX2060 for 7B+ LLMs

❌ Limitations of NVIDIA P100 for Ollama

- Struggles with 16B+ models

- Older Pascal architecture lacks Tensor Cores

If you need affordable LLM hosting, renting a Tesla P100 for Ollama inference at $159–199/month offers great value for small to mid-sized AI models.
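To put the price in perspective, you can convert the monthly rental into a cost per million generated tokens. The sketch below assumes a 30-day month, a single request stream, and a server that generates continuously. Real workloads will sit well below that, which the `utilization` parameter (our own addition) is meant to model.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month


def cost_per_million_tokens(monthly_usd, tokens_per_s, utilization=1.0):
    """Rental cost per one million generated tokens.

    `utilization` is the fraction of the month the server spends generating
    (1.0 means it never idles, which is an upper bound on efficiency).
    """
    tokens_per_month = tokens_per_s * SECONDS_PER_MONTH * utilization
    return monthly_usd / tokens_per_month * 1_000_000


# Best case from the benchmark: Llama2-7B at 49.66 tokens/s on the $159 plan.
print(f"${cost_per_million_tokens(159, 49.66):.2f} per million tokens")
# Slowest case: DeepSeek-r1-14B at 19.43 tokens/s on the $199 plan.
print(f"${cost_per_million_tokens(199, 19.43):.2f} per million tokens")
```

With these assumptions, the range works out to about $1.24 to $3.95 per million tokens. Halving the utilization doubles the cost, so the value of a flat-rate server depends heavily on how busy you keep it.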

Get Started with Tesla P100 Hosting for 3-16B LLMs

For those deploying LLMs on Ollama, choosing the right NVIDIA Tesla P100 hosting solution can significantly impact performance and costs. If you're working with 3B-16B models, the P100 is a solid choice for AI inference at an affordable price.


Final Thoughts: Best Models for Tesla P100 on Ollama

For Nvidia P100 rental users, the best models for small-scale AI inference on Ollama are:

- Llama2-7B (Fastest: 49.66 tokens/s)

- DeepSeek-r1-7B (Balanced: 34.31 tokens/s)

- Qwen2.5-7B (Strong alternative: 34.70 tokens/s)

- DeepSeek-Coder-v2-16B (Best large model: 40.25 tokens/s)

For best performance, stick to 7B–13B models. If you need larger LLM inference, consider upgrading to A4000 or V100 GPUs. Would you like to see further benchmarks comparing the Tesla P100 vs. V100 for Ollama? Let us know in the comments!
