Test Environment Specifications

The USA server test environment was configured with the following hardware and software specifications:

Server Configuration:

- CPU: Eight-Core Xeon E5-2690

- RAM: 32GB

- Storage: 120GB SSD + 960GB SSD

- Bandwidth: 100Mbps-1Gbps

- Operating System: Ubuntu 24.0

GPU Details:

- GPU Model: Nvidia Quadro P1000

- Microarchitecture: Pascal

- Compute Capability: 6.1

- CUDA Cores: 640

- GPU Memory: 4GB GDDR5

- FP32 Performance: 1.894 TFLOPS

Models Tested on Ollama GPU Platform

We tested a range of models, from small ones such as Qwen2.5 (0.5B) and TinyLlama (1.1B) to larger ones such as Phi3.5 (3.8B).

All models were evaluated on Ollama version 0.5.4 with 4-bit quantization for memory efficiency. The benchmark included:

- Llama3.2 (1B, 3B parameters)

- Gemma2 (2B parameters)

- CodeGemma (2B parameters)

- Qwen2.5 (0.5B, 1.5B, 3B parameters)

- TinyLlama (1.1B parameters)

- Phi3.5 (3.8B parameters)
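
As a rough illustration of why 4-bit quantization matters on a 4GB card, the sketch below estimates the memory taken by the weights alone at 4 and 16 bits per parameter. It is a back-of-the-envelope lower bound, not Ollama's own accounting: the function name `weight_gb` and the dictionary keys are hypothetical, and real model files are larger because some tensors are kept at higher precision and inference also needs memory for the KV cache.

```python
# Back-of-the-envelope estimate of weight memory at a given bit width.
# Assumption: every parameter is stored at `bits` bits. Real quantized files
# are larger (mixed-precision tensors, metadata), and inference also needs
# VRAM for the KV cache and runtime buffers.

VRAM_GB = 4.0  # Quadro P1000 memory from the spec list

# Parameter counts (in billions) of the models in this benchmark.
MODELS = {
    "llama3.2-1b": 1.0,
    "llama3.2-3b": 3.0,
    "gemma2-2b": 2.0,
    "codegemma-2b": 2.0,
    "qwen2.5-0.5b": 0.5,
    "qwen2.5-1.5b": 1.5,
    "qwen2.5-3b": 3.0,
    "tinyllama-1.1b": 1.1,
    "phi3.5-3.8b": 3.8,
}


def weight_gb(params_billion: float, bits: int) -> float:
    """Return the weight size in GB (10^9 bytes) at `bits` bits per parameter."""
    return params_billion * bits / 8


if __name__ == "__main__":
    for name, params in MODELS.items():
        q4, fp16 = weight_gb(params, 4), weight_gb(params, 16)
        verdict = "fits" if fp16 < VRAM_GB else "exceeds 4GB"
        print(f"{name:15s} 4-bit ~{q4:.2f} GB   16-bit ~{fp16:.2f} GB ({verdict} at 16-bit)")
```

Under this estimate, the weights of the 3B and 3.8B models would not fit in 4GB at 16 bits per parameter, while at 4 bits all of them leave room for runtime overhead.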

Benchmark Results: Ollama GPU P1000 Performance Metrics

Below are the benchmark results obtained when running the models on the P1000 GPU:

| Models | llama3.2 | llama3.2 | gemma2 | codegemma | qwen2.5 | qwen2.5 | qwen2.5 | tinyllama | phi3.5 |

|---|---|---|---|---|---|---|---|---|---|

| Parameters | 1b | 3b | 2b | 2b | 0.5b | 1.5b | 3b | 1.1b | 3.8b |

| Size | 1.3GB | 2GB | 1.6GB | 1.6GB | 395MB | 1.1GB | 1.9GB | 638MB | 2.2GB |

| Quantization | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 |

| Running on | Ollama0.5.4 | Ollama0.5.4 | Ollama0.5.4 | Ollama0.5.4 | Ollama0.5.4 | Ollama0.5.4 | Ollama0.5.4 | Ollama0.5.4 | Ollama0.5.4 |

| Downloading Speed(mb/s) | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 |

| CPU Rate | 6.7% | 6.3% | 6.3% | 6.3% | 6.5% | 6.3% | 6.3% | 6.4% | 6.4% |

| RAM Rate | 4.5% | 4.8% | 4.9% | 5.0% | 5.4% | 5.4% | 4.0% | 4.0% | 4.2% |

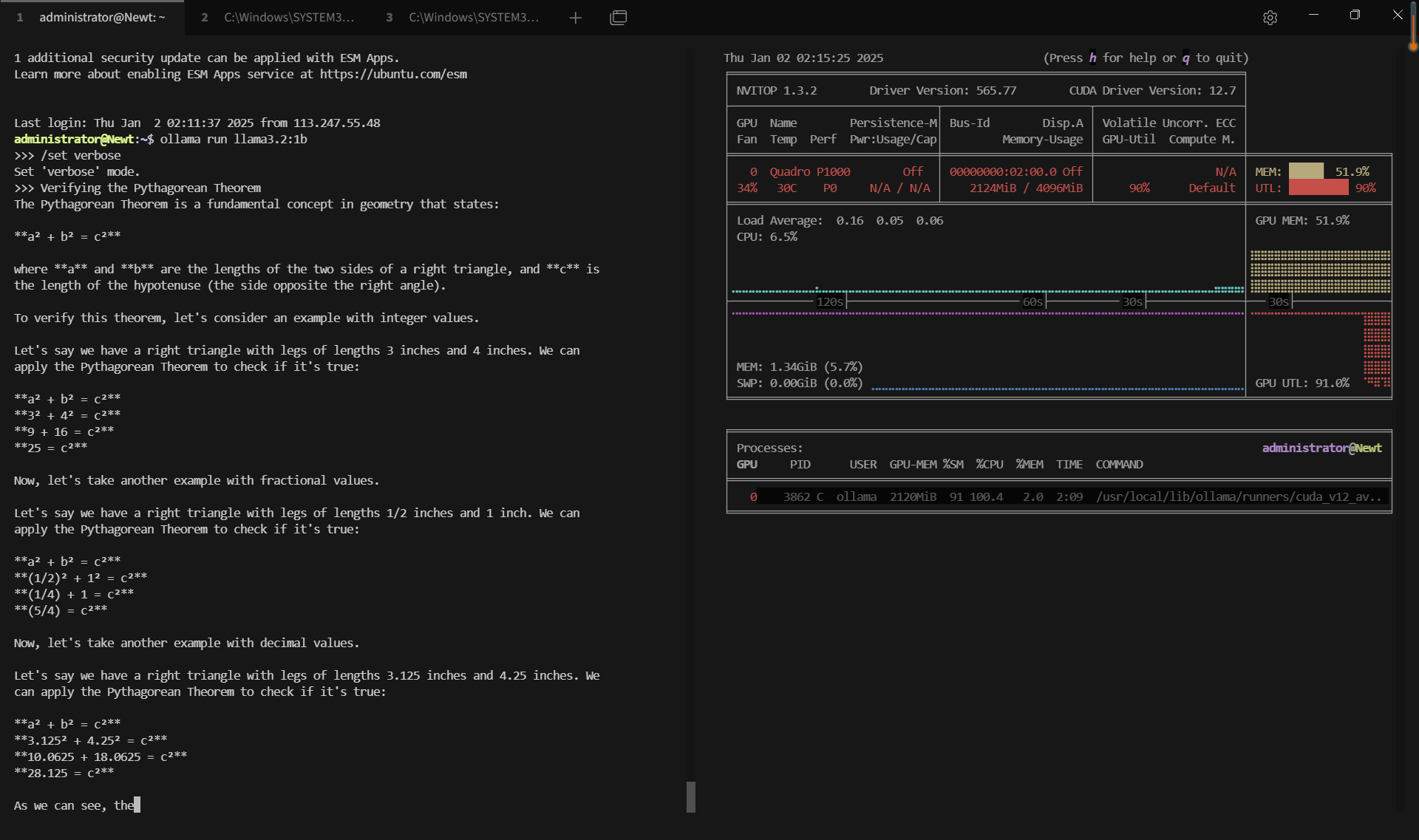

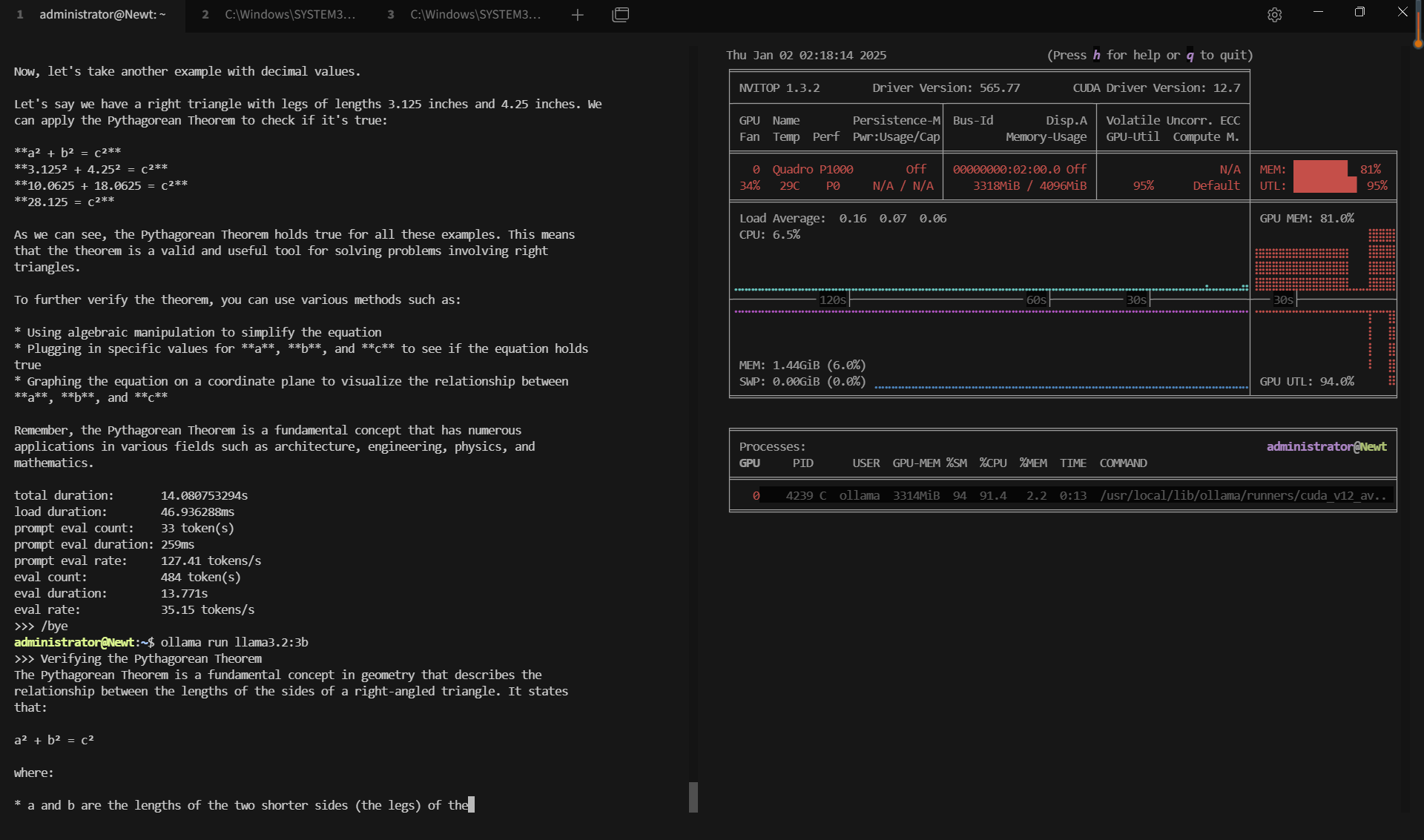

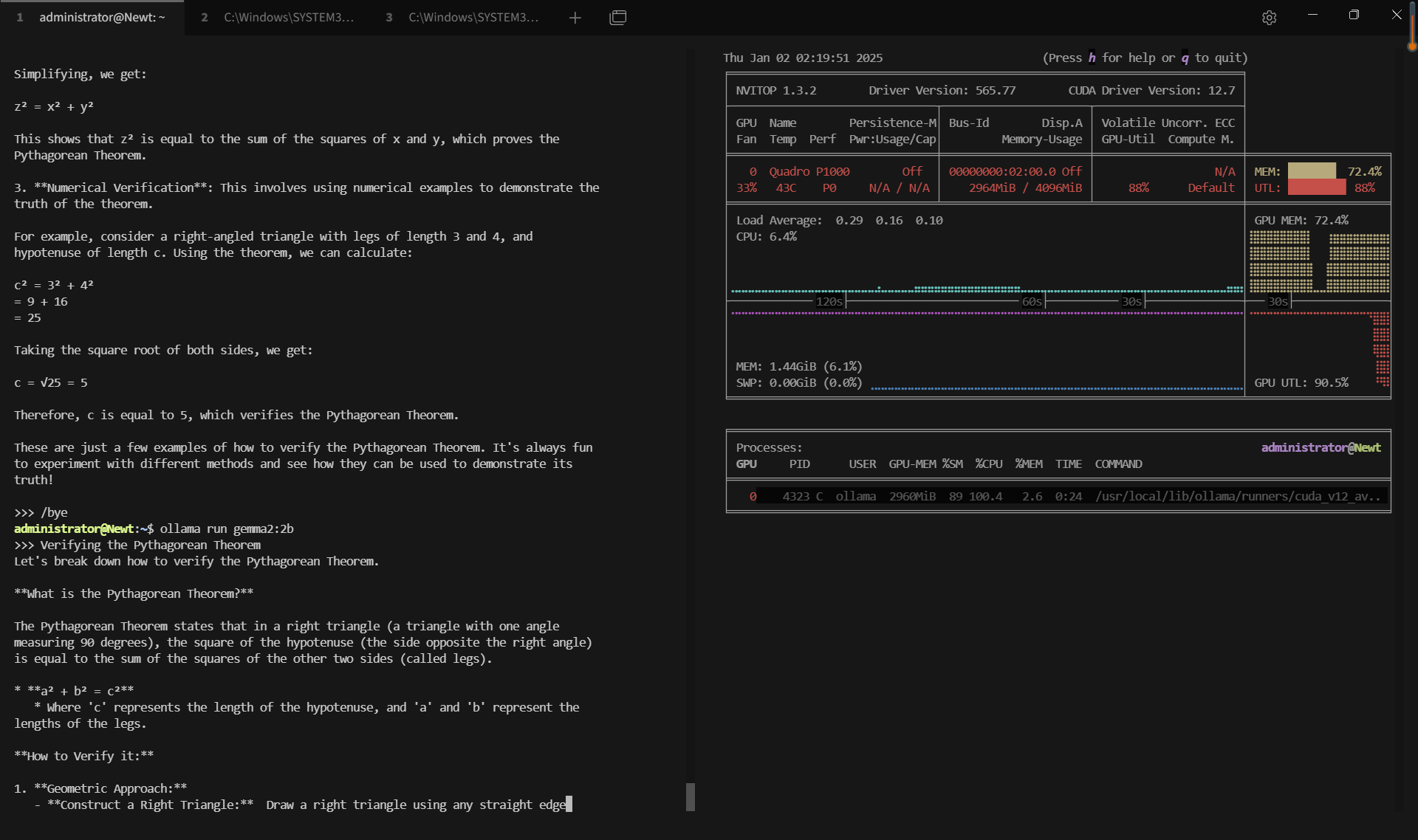

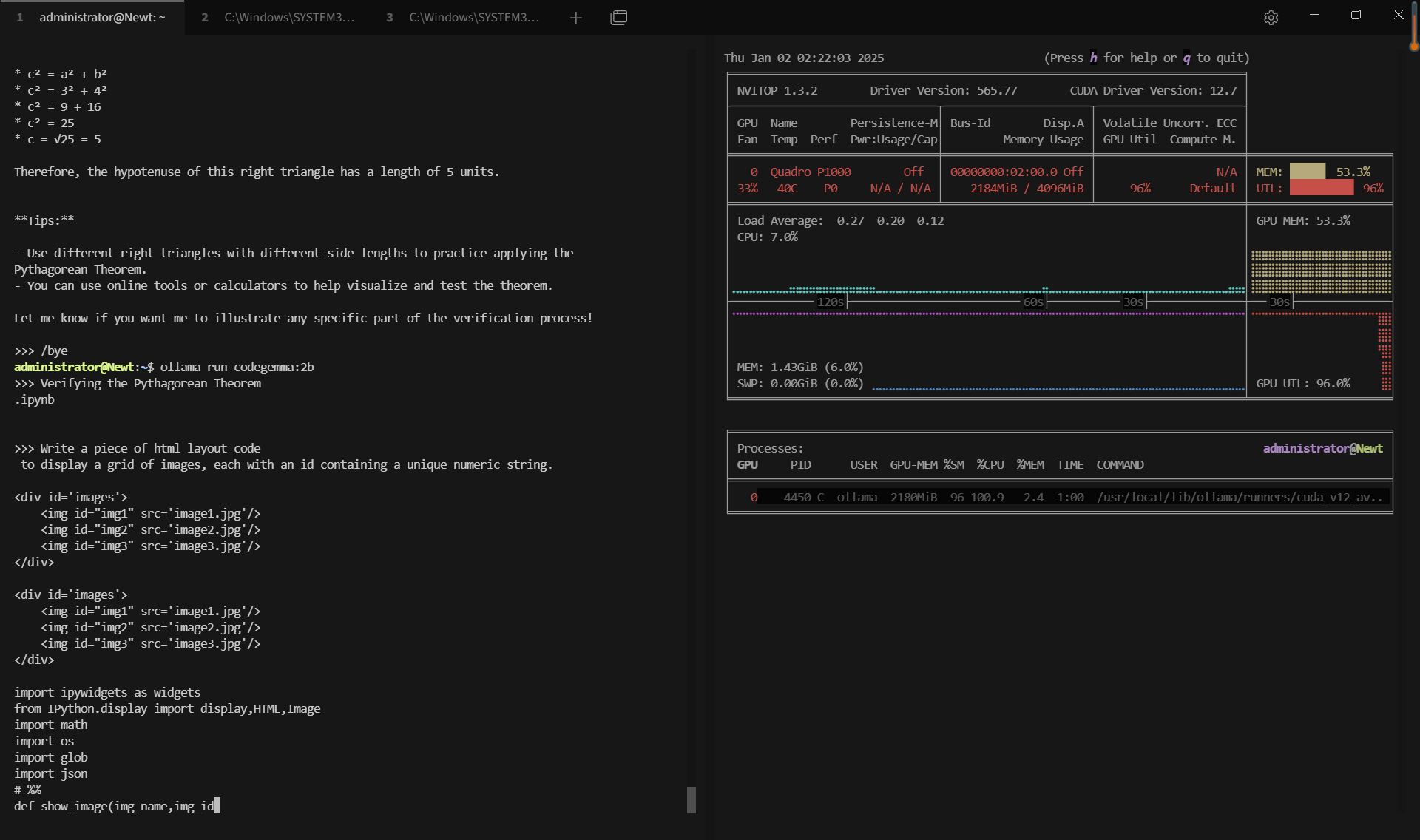

| GPU vRAM | 51.9% | 80.2% | 72.4% | 53.4% | 20% | 37.2% | 60.8% | 33.2% | 74% |

| GPU UTL | 92% | 95% | 89% | 96% | 80% | 89% | 95% | 93% | 97% |

| Eval Rate(tokens/s) | 28.90 | 19.97 | 19.46 | 30.59 | 54.78 | 34.43 | 17.92 | 62.33 | 18.87 |

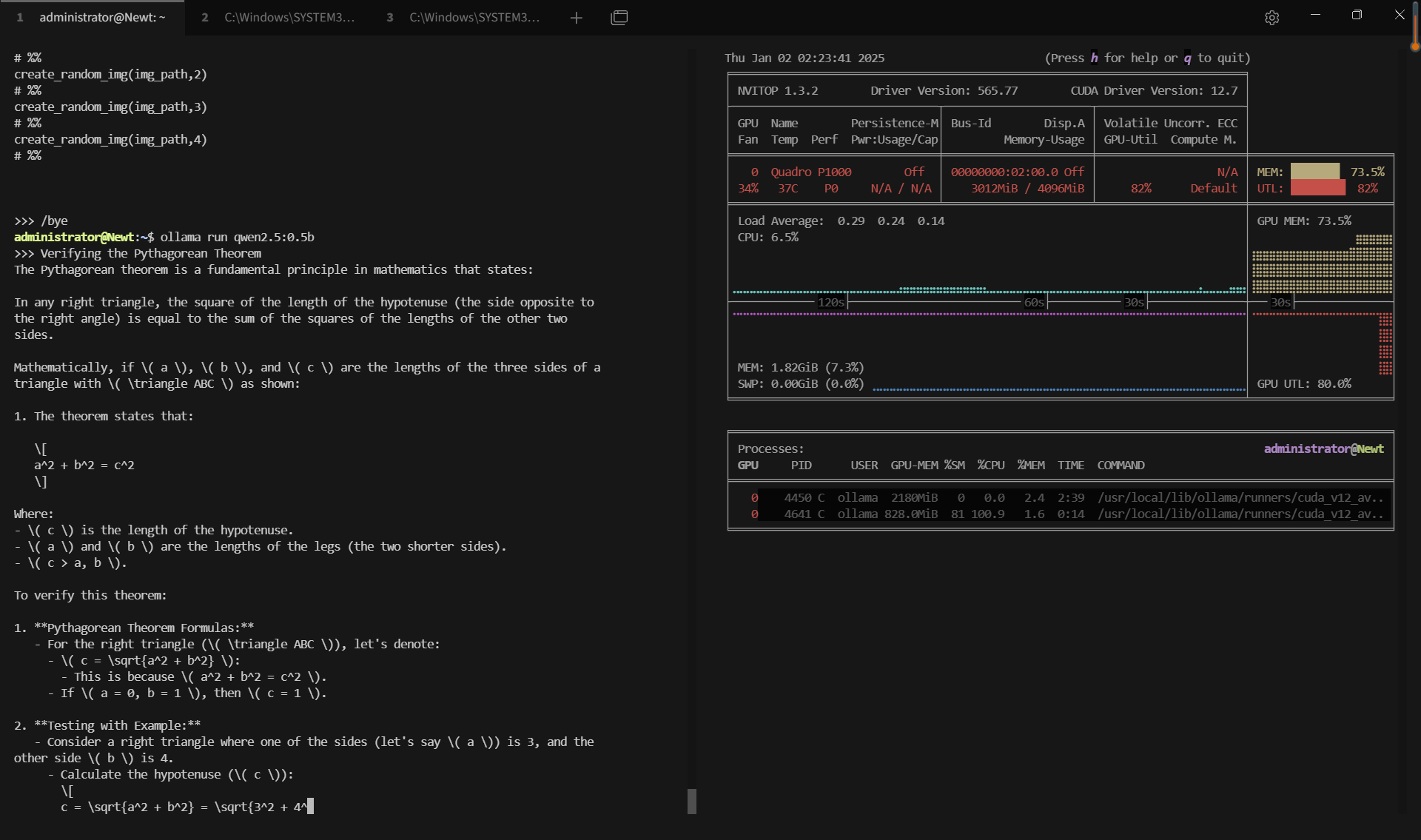

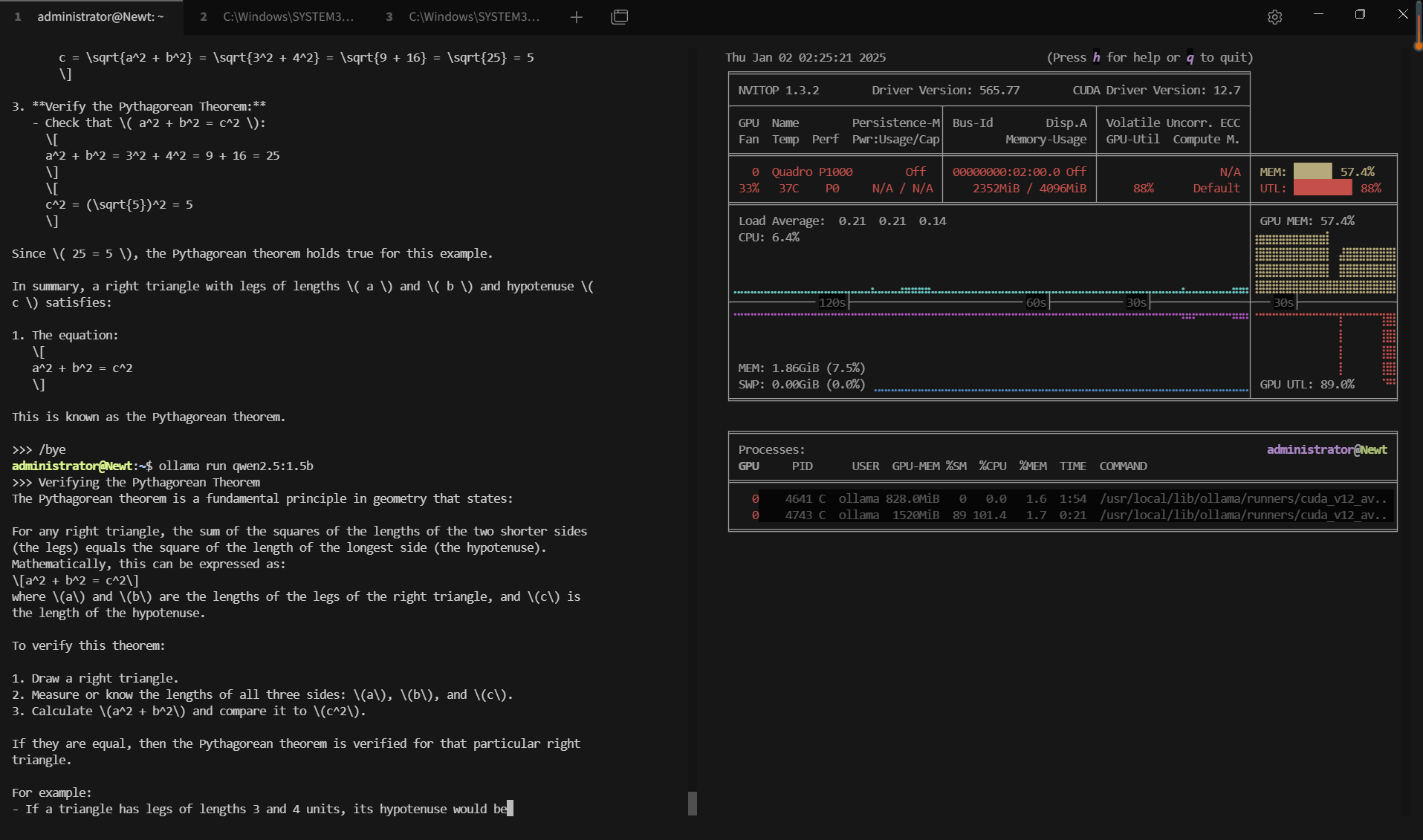

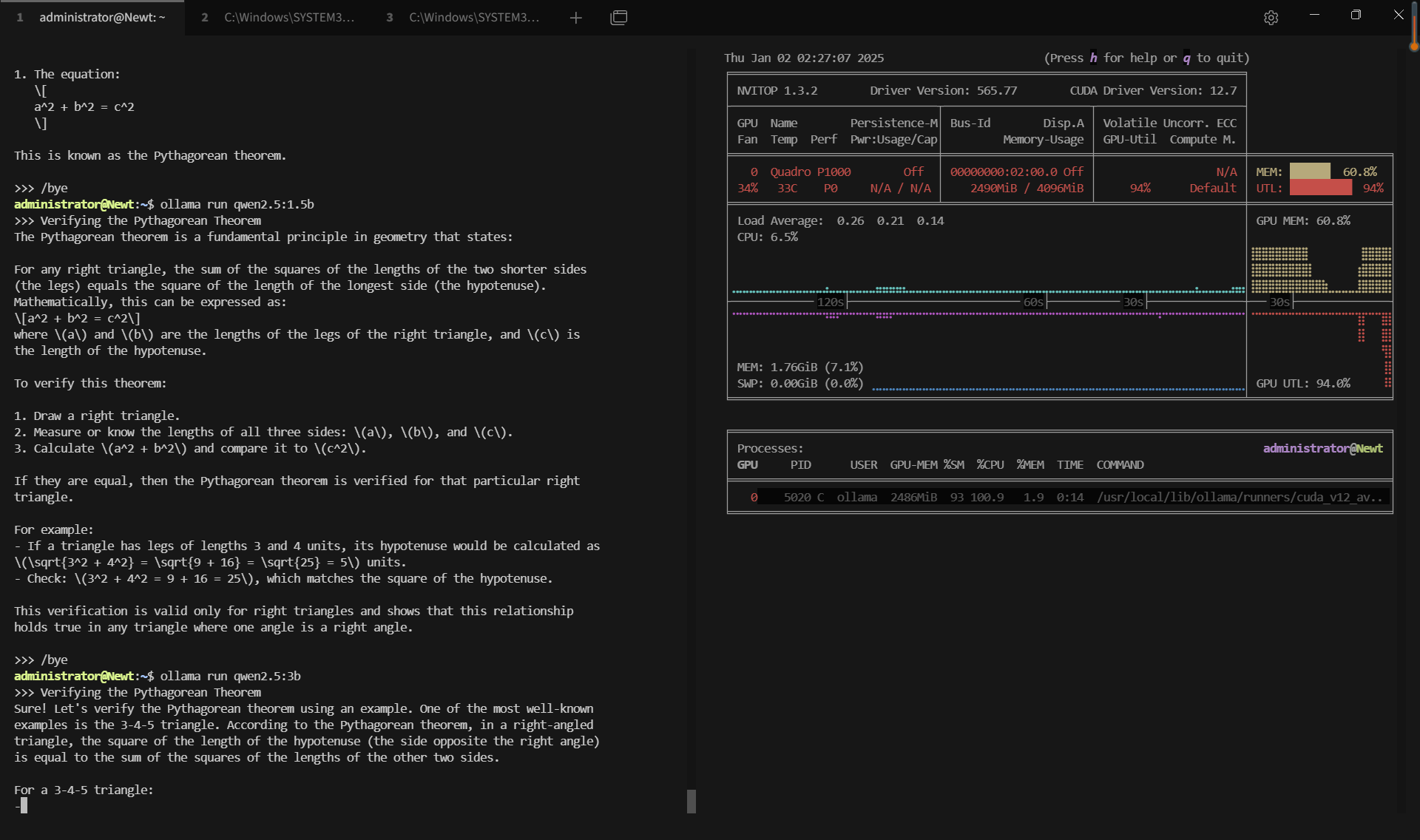

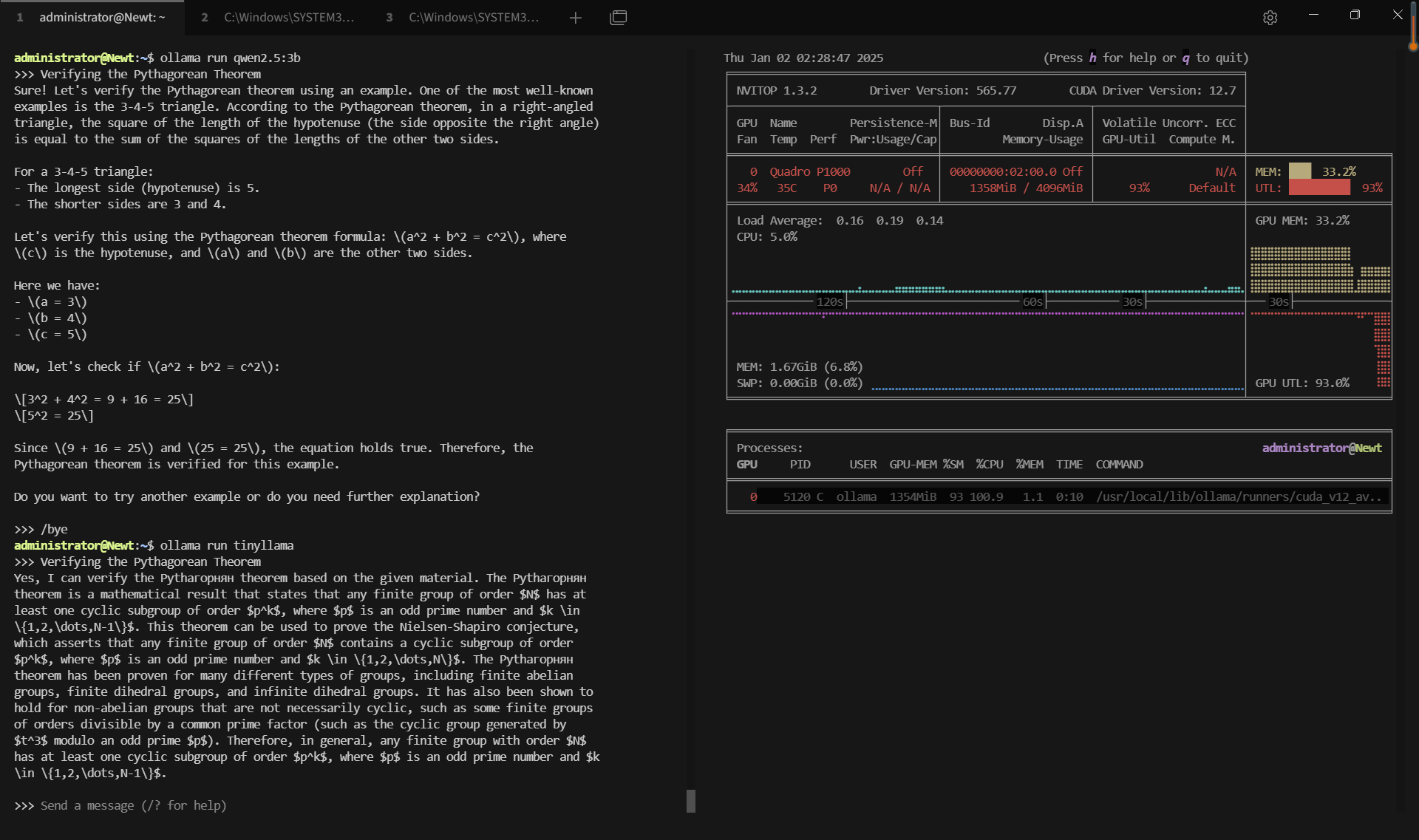

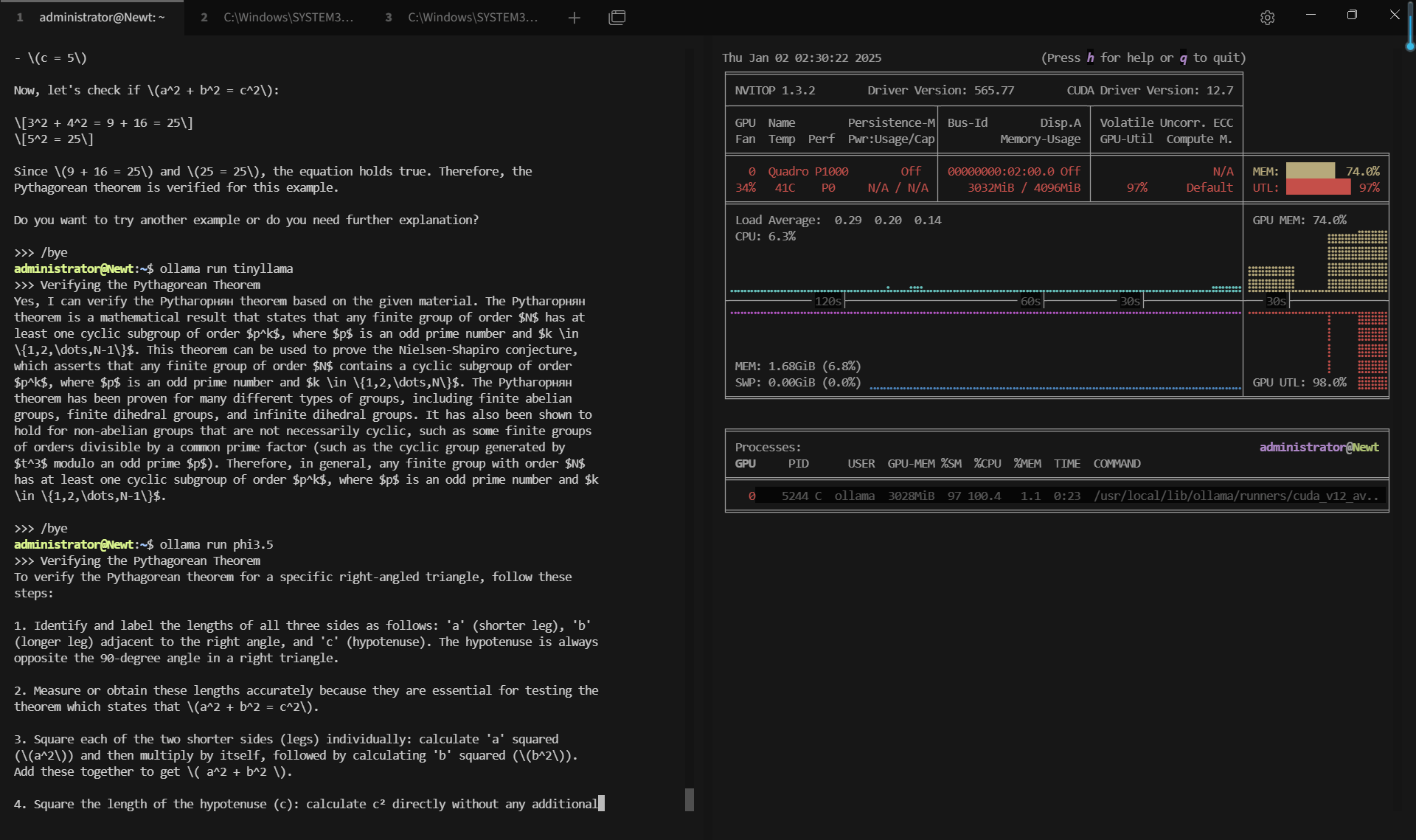

Record real-time gpu server resource consumption data:

Screen shoots: Click to enlarge and view
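
The eval rate in the results table is the number of generated tokens divided by the generation time. As a minimal sketch of how one might reproduce it, the code below derives that figure from the `eval_count` and `eval_duration` (nanoseconds) fields returned by Ollama's `/api/generate` endpoint. It assumes a local Ollama server on the default port 11434; the helper names, the prompt, and the model tag are illustrative, and this is not necessarily how the figures in this benchmark were collected.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint


def eval_rate(response: dict) -> float:
    """Tokens per second from an Ollama generate response.

    eval_count is the number of generated tokens; eval_duration is in nanoseconds.
    """
    return response["eval_count"] / (response["eval_duration"] / 1e9)


def generate(model: str, prompt: str) -> dict:
    """Send one non-streaming request to a local Ollama server and return its JSON."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        return json.load(reply)


if __name__ == "__main__":
    # Requires a running Ollama server with the model already pulled.
    result = generate("tinyllama", "Explain what a GPU is in two sentences.")
    print(f"eval rate: {eval_rate(result):.2f} tokens/s")
```

On the command line, `ollama run <model> --verbose` prints the same statistic after each reply.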

Key Takeaways

1. Impressive GPU Efficiency on Limited Resources

Even on a mid-range GPU such as the Nvidia Quadro P1000, Ollama sustained high GPU utilization (80-97%) across all tested models. With 4-bit quantization, the card’s 4GB of GPU memory was enough to run models of up to 3.8B parameters, with VRAM usage peaking at 80.2% for Llama3.2-3B.

2. Evaluation Rates Vary by Model Size

The lightest models were the fastest: TinyLlama (1.1B parameters) reached 62.33 tokens/s and Qwen2.5-0.5B reached 54.78 tokens/s, which suits low-latency applications. The 3B-class models (Qwen2.5-3B, Phi3.5, and Llama3.2-3B) ran at roughly 18-20 tokens/s, trading speed for model capacity.
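
To make these rates concrete, the sketch below converts tokens per second into the time needed to generate a reply of a given length. The 256-token reply length is an arbitrary assumption for illustration, the function name is hypothetical, and the estimate ignores model load time and prompt processing.

```python
# Eval rates (tokens/s) taken from the benchmark results table.
EVAL_RATES = {
    "tinyllama-1.1b": 62.33,
    "qwen2.5-0.5b": 54.78,
    "llama3.2-3b": 19.97,
    "phi3.5-3.8b": 18.87,
    "qwen2.5-3b": 17.92,
}


def generation_seconds(tokens: int, tokens_per_second: float) -> float:
    """Time to generate `tokens` output tokens at a steady eval rate."""
    return tokens / tokens_per_second


if __name__ == "__main__":
    reply_tokens = 256  # hypothetical reply length
    for model, rate in EVAL_RATES.items():
        print(f"{model:15s} {generation_seconds(reply_tokens, rate):5.1f} s")
```

At these rates, a 256-token reply takes roughly 4 seconds on TinyLlama and about 13 to 14 seconds on the 3B-class models.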

3. Minimal CPU and RAM Overheads

With the models running on the GPU, CPU utilization stayed between 6.3% and 6.7% and RAM utilization between 4.0% and 5.4% for every model tested. This leaves room for additional workloads on the same server without compromising performance.

| Metric | Value Across Models |
|---|---|
| Download Speed | 11 MB/s for all models |
| CPU Utilization | 6.3% to 6.7% across all models |
| RAM Utilization | 4.0% to 5.4% across all models |
| GPU VRAM Utilization | 20% (Qwen2.5-0.5B) to 80.2% (Llama3.2-3B) |
| GPU Utilization | 80% (Qwen2.5-0.5B) to 97% (Phi3.5) |
| Evaluation Speed | 17.92 tokens/s (Qwen2.5-3B) to 62.33 tokens/s (TinyLlama) |
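
The ranges in a summary like this can be recomputed from the per-model results. The sketch below is one way to do so; the dictionary layout and the helper name `metric_range` are illustrative choices, while the numbers are copied from the benchmark results table.

```python
# Per-model results copied from the benchmark results table:
# (GPU VRAM %, GPU utilization %, eval rate in tokens/s)
RESULTS = {
    "llama3.2-1b": (51.9, 92, 28.90),
    "llama3.2-3b": (80.2, 95, 19.97),
    "gemma2-2b": (72.4, 89, 19.46),
    "codegemma-2b": (53.4, 96, 30.59),
    "qwen2.5-0.5b": (20.0, 80, 54.78),
    "qwen2.5-1.5b": (37.2, 89, 34.43),
    "qwen2.5-3b": (60.8, 95, 17.92),
    "tinyllama-1.1b": (33.2, 93, 62.33),
    "phi3.5-3.8b": (74.0, 97, 18.87),
}

# Position of each metric inside the result tuples.
METRICS = {"vram_pct": 0, "gpu_util_pct": 1, "eval_rate": 2}


def metric_range(metric: str) -> tuple:
    """Return ((model, min_value), (model, max_value)) for one metric."""
    idx = METRICS[metric]
    lowest = min(RESULTS, key=lambda m: RESULTS[m][idx])
    highest = max(RESULTS, key=lambda m: RESULTS[m][idx])
    return (lowest, RESULTS[lowest][idx]), (highest, RESULTS[highest][idx])


if __name__ == "__main__":
    for metric in METRICS:
        (lo_model, lo), (hi_model, hi) = metric_range(metric)
        print(f"{metric:13s} min {lo} ({lo_model})  max {hi} ({hi_model})")
```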

Use Cases for P1000 GPU Servers with Ollama

- Edge AI Deployments: For businesses seeking cost-efficient deployment of AI applications on mid-range servers.

- LLM Testing and Prototyping: Developers can test different models under constrained GPU environments, gaining valuable insights into their behavior before scaling to larger infrastructure.

- Educational Purposes: Universities and labs can use such setups to train students or perform small-scale research projects on LLMs.

📢 Get Started with P1000 GPU Hosting for LLMs

If you want to run inference on models in the 0.5B to 4B parameter range, explore our P1000 dedicated server hosting options today!

Flash Sale to May 27

Express GPU Dedicated Server - P1000

$ 37.00/mo

50% OFF Recurring (Was $74.00)

- 32GB RAM

- Eight-Core Xeon E5-2690

- 120GB + 960GB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

- GPU: Nvidia Quadro P1000

- Microarchitecture: Pascal

- CUDA Cores: 640

- GPU Memory: 4GB GDDR5

- FP32 Performance: 1.894 TFLOPS

Basic GPU Dedicated Server - T1000

$ 59.50/mo

50% OFF Recurring (Was $119.00)

- 64GB RAM

- Eight-Core Xeon E5-2690

- 120GB + 960GB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

- GPU: Nvidia Quadro T1000

- Microarchitecture: Turing

- CUDA Cores: 896

- GPU Memory: 8GB GDDR6

- FP32 Performance: 2.5 TFLOPS

- Ideal for light gaming, remote design, Android emulators, and entry-level AI tasks

Basic GPU Dedicated Server - GTX 1650

$ 79.20/mo

33% OFF Recurring (Was $119.00)

- 64GB RAM

- Eight-Core Xeon E5-2667v3

- 120GB + 960GB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

- GPU: Nvidia GeForce GTX 1650

- Microarchitecture: Turing

- CUDA Cores: 896

- GPU Memory: 4GB GDDR5

- FP32 Performance: 3.0 TFLOPS

Basic GPU Dedicated Server - GTX 1660

$ 92.00/mo

42% OFF Recurring (Was $159.00)

- 64GB RAM

- Dual 10-Core Xeon E5-2660v2

- 120GB + 960GB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

- GPU: Nvidia GeForce GTX 1660

- Microarchitecture: Turing

- CUDA Cores: 1408

- GPU Memory: 6GB GDDR6

- FP32 Performance: 5.0 TFLOPS

Conclusion: Optimizing Ollama for GPU Servers

This benchmark demonstrates that Ollama can efficiently leverage a Pascal-based Nvidia Quadro P1000 GPU, even under constrained memory conditions. While not designed for high-end data center applications, servers like this provide a practical solution for testing, development, and smaller-scale LLM deployments.

If you're considering deploying Ollama on similar hardware, choose 4-bit quantized models that fit within the GPU's memory and monitor GPU utilization to maximize throughput. For larger models or production use, a GPU with more memory (e.g., 8GB or 16GB) is the better choice.
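
As a minimal sketch of the monitoring step, the code below polls `nvidia-smi` for GPU utilization and memory use while a model is running. It assumes the NVIDIA driver's `nvidia-smi` tool is on the PATH and that the server has a single GPU; the function names and the one-second sampling interval are illustrative.

```python
import subprocess
import time

# Ask nvidia-smi for utilization (%) and memory (MiB) as bare CSV values.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_sample(line: str) -> dict:
    """Parse one CSV line such as '95, 3284, 4096' (percent, MiB, MiB)."""
    util, used, total = (float(field) for field in line.split(","))
    return {"gpu_util_pct": util, "vram_pct": 100 * used / total}


def sample_gpu() -> dict:
    """Run nvidia-smi once and return utilization figures for the first GPU."""
    output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_sample(output.strip().splitlines()[0])


if __name__ == "__main__":
    # Sample once per second for ten seconds while a model is generating.
    for _ in range(10):
        stats = sample_gpu()
        print(f"GPU {stats['gpu_util_pct']:.0f}%  VRAM {stats['vram_pct']:.1f}%")
        time.sleep(1)
```

If VRAM usage approaches 100% or GPU utilization stays low during generation, that is a hint the chosen model is a poor fit for the card.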